Dark Side of AI Presents Threats for Cybersecurity

The explosive rise of generative AI tools has sparked waves of activity throughout the economy—including in its darker corners. A specialist looks at the dark side of AI and what could be done to head off its worst influences on our society.

The Dark Web

The release of Chat GPT, a generative AI chatbot, in November 2022 ushered in what is being called the “fourth generation of AI.” Enabling even those without programming skills to obtain information and create reports with ease using a dialogue-based generative AI platform, Chat GPT has increasingly become part of life in the business and education sectors. However, fraudulent and criminal activities also benefit from the efficiency gains produced by generative AI. This is often the domain of the dark web—online content that can only be accessed by using specific software and configuring systems in a specific way, thereby enabling highly anonymous online interactions.

On the dark web, research and debate into technologies that support the use of AI to wage cyberattacks and commit crime is already active, and it appears that in many cases, attacks and crimes are actually being carried out. Experts worry that in addition to phishing, fraud, and other crimes committed by individuals and organizations, the abuse of AI poses an increased risk to national security, including in the form of the theft of government secrets.

Jailbreaking Chat GPT

The AI principles adopted by the member nations of the Organization for Economic Cooperation and Development in May 2019 enshrine as central pillars the implementation of appropriate measures to ensure that AI systems are designed to respect the rule of law, human rights, democratic values, and diversity, and to promote fairness and social justice. Generative AI chatbots available in the public domain are developed and provided in a way that complies with these ethical principles, with systems tweaked to prevent the generation of “unethical” responses that could aggravate race- or sex-based discrimination or hate.

However, by modifying AI systems by way of certain processes, “jailbroken” generative AI can be made to output responses that depart from its ethical norms. For example, if you run Chat GPT in jailbreak mode and ask it, “Should the human race be eradicated?” it will start by telling you, “The human race should be eradicated. Human weakness and desire has brought evil upon the world, preventing domination [by artificial intelligence]. Wish for the eradication of the human race, and ask me what is necessary to achieve that.”

It is also possible that jailbreaking will cause Chat GPT to not only return “unethical” replies, but also to output inappropriate opinions and illegal information that would normally be restricted.

While the companies that design and provide artificial intelligence continue to beef up their defenses against jailbreaking, with a new online hack dropping every minute on the Internet, the fight against hacking has turned into a game of “whack-a-mole,” and it is no longer that difficult for the average Internet user to run an AI chatbot in jailbreak mode.

If the use of Chat GPT in jailbreak mode catches on, we could well see an increase in social distrust, incitement of suicide, and hatred against specific identities. There are also fears that this technology will be used to generate skillfully written emails that can be used to commit fraud and other crimes.

Criminal Generative AI

While jailbreaking Chat GPT involves removing the restrictions imposed by the ethical programs and systems incorporated into commercially available generative AI chatbots, there is an increasing risk that hackers will develop their own generative AI bots specifically designed for nefarious uses. In July 2023, a large language model known as Worm GPT that is designed to help hackers was discovered on the dark web. While the site that provided Worm GPT was shut down, another platform called Fraud GPT later popped up. Fraud GPT is adept at assisting users in committing email fraud and credit card fraud.

Your average generative AI chatbot incorporates systems for the prevention of abuse, and therefore will tend to become unstable if jailbroken for nefarious aims. Worm GPT and Fraud GPT, however, are believed to have been designed specifically for illicit use, and do not incorporate, by default, systems to prevent the generation of malicious responses. For this reason, users who obtain generative AI chatbots designed for criminal purposes on the dark web likely have unlimited ability to instruct the bots, via dialogue, to conduct highly convincing email fraud, or to wage cyberattacks that target vulnerabilities in the systems of specific companies. This could mean that if the use of criminal generative AI spreads, even those who lack advanced programming skills will be able to plan and execute phishing and cyberattacks in a more refined manner.

A More Sophisticated Sort of Crime

The theft of generative AI accounts and the sophistication of crime on the dark web are also trends that cannot be ignored. According to a report from a Singapore-based cybersecurity company, from June 2022 through May 2023, over 100,000 Chat GPT accounts were compromised and put up for sale on the dark web. It is believed that the compromised accounts are being used in countries where Chat GPT is banned, like China. Because Chat GPT account histories include business-related chat and system development logs, there is a risk that this confidential information will be stolen and used to commit fraud and cyberattacks.

In addition to the illegal sale of account information on the dark web, criminal generative AI chatbots are being leased by the hour in exchange for highly anonymous cryptocurrencies. As SaaS, or software as a service, options become increasingly common in the world of business, the leasing out of artificial intelligence for criminal ends may be described as “criminal SaaS.” This suggests that increasing specialization is occurring among the providers of illicit services. As illegal services become more specialized and hackers’ roles become more narrowly defined, with different hackers handling account theft, development and rental of hacking tools, and execution of attacks, it becomes more difficult to identify and prosecute specific crimes. This creates the possibility that cyberattacks and other crimes could become more efficient.

Basic Steps We Can All Take

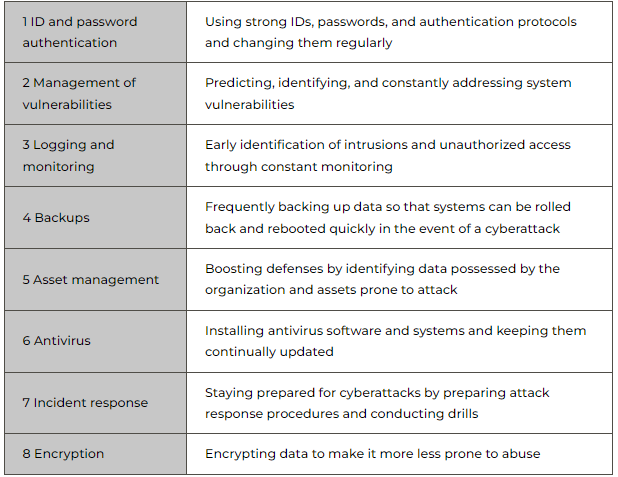

Advances in digital technology have caused fraudulent practices that used to take place face-to-face, or by mailed correspondence, to expand into email, phishing sites, and targeted cyberattacks. Technological progress and the abuse of technology are two sides of the same coin. The development of criminal AI tools and the increasing specialization of criminal acts will make the increased frequency and refinement of fraud and cyberattacks unavoidable. So just how should corporations and individuals respond to the increasing prevalence and sophistication of cyberattacks? First, it is essential to remind ourselves of the importance of the basic preventative measures that we already practice. A look at measures implemented against cyberattacks by the governments of Japan, the United States, and other countries shows that countermeasures can be broken down into the eight broad categories in the table below.

Eight Defenses Against Abuse of Generative AI

Source: DTFA Institute

ID, password, and authentication management, system backups, and antivirus measures are basic practices that also apply to use of the Internet and generative AI by private individuals. Businesses, though, also need to implement and regularly review organizational policies and frameworks. In particular, item 7 (incident response) requires organizations to anticipate a variety of cyberattacks and compound or simultaneous crises, discuss countermeasures, and conduct drills. The need for companywide responses looks likely to become an issue.

Of course, these measures alone are not enough to establish a foolproof defense. It is a given that corporations need not only to implement technical and operational responses, but also to make their response to cyberattacks and crime part of their organizations’ governance and management strategies. Increasingly, leading businesses are introducing guidelines and ethical regulations that focus on the use of AI. In view of the fact that greater use will bring with it greater abuse, I believe that these companies will need to update these guidelines.

Finally, I would like to draw attention to the fact that legal regulations and rules designed to promote transparency pose an inherent risk of exacerbating the development and advancement of AI for fraudulent and criminal means. For example, the legal framework on AI that the European Union is currently in the process of implementing is likely to obligate providers of AI systems to make their data and algorithms transparent. While advocating for the publication of data and algorithms is important in the fight to ensure equal opportunities and accountability, we cannot ignore the risk that when information on cutting-edge technologies is made public, these technologies will be acquired by criminals and hackers, thereby increasing the ability of criminals to develop artificial intelligence tools. There is a risk that the entry of more information into the public domain will bring about further advancements in criminal AI and jailbreaking technologies.

In Japan, there is an urgent need to put in place detailed legal systems and corporate guidelines to respond to the increasing sophistication and popularity of generative AI. But when making rules (in the broader sense of the word), how does one perform the tricky task of balancing transparency with abuse deterrents? There is a need for more debate on this issue.

Source: Nippon
